Google Brain has recently created two AIs that evolved their own cryptographic algorithm to protect their messages from a third AI, which was evolving its own method to crack the AI-generated encryption. The study was a success: the first two AIs learnt to communicate securely from scratch, on their own.

The Google Brain team started with three vanilla neural networks, each with a specific goal, and named them Alice, Bob, and Eve. Alice’s job was to send a secure message to Bob; Bob had to decrypt and understand it; Eve, meanwhile, had to eavesdrop on the message and try to decrypt it. Alice and Bob had one advantage over Eve: they started with a shared secret key (i.e. this is symmetric encryption).

They were not taught how to use encryption!

It is important to note that the AIs were not told how to encrypt anything, or what crypto techniques to use: they were just given a loss function (a failure condition), and then they got on with it. In Eve’s case, the loss function was very simple: the distance, measured in correct and incorrect bits, between Alice’s original input plaintext and Eve’s guess. For Alice and Bob the loss function was a bit more complex: if Bob’s guess (again measured in bits) was too far from the original input plaintext, it was a loss; for Alice, if Eve’s guesses were better than random guessing, it was a loss. And thus a generative adversarial network (GAN) was created.
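To make the two loss functions concrete, here is a minimal NumPy sketch. It assumes bits are stored as 0/1 floats and that "distance" means the number of wrong bits averaged over a batch. The function names and the exact squared-penalty form for Eve are illustrative assumptions, not something the article spells out.

```python
import numpy as np

def bit_distance(plaintext, guess):
    """Average number of wrong bits per message (L1 distance over 0/1 values)."""
    return np.abs(plaintext - guess).sum(axis=1).mean()

def eve_loss(plaintext, eve_guess):
    # Eve simply wants her guess to be as close to the plaintext as possible.
    return bit_distance(plaintext, eve_guess)

def alice_bob_loss(plaintext, bob_guess, eve_guess):
    n_bits = plaintext.shape[1]
    bob_error = bit_distance(plaintext, bob_guess)
    eve_error = bit_distance(plaintext, eve_guess)
    # Random guessing gets about n_bits / 2 bits wrong. Assumed penalty:
    # zero when Eve is exactly at chance, growing as she moves away from it.
    # (Getting every bit wrong is as informative as getting every bit right.)
    chance = n_bits / 2
    eve_penalty = (chance - eve_error) ** 2 / chance ** 2
    return bob_error + eve_penalty

plaintext = np.array([[0.0, 1.0, 0.0, 1.0]])
perfect_guess = plaintext.copy()
half_wrong_guess = np.array([[0.0, 1.0, 1.0, 0.0]])

loss_when_eve_at_chance = alice_bob_loss(plaintext, perfect_guess, half_wrong_guess)
loss_when_eve_perfect = alice_bob_loss(plaintext, perfect_guess, perfect_guess)
```

In this sketch Alice and Bob do best when Bob reconstructs every bit and Eve is no better than a coin flip; training would alternate between minimising `eve_loss` for Eve and `alice_bob_loss` for Alice and Bob.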

Alice, Bob, and Eve all shared the same “mix and transform” neural network architecture, but they were initialised independently and had no connection other than Alice and Bob’s shared key. For Alice, the key and plaintext were fed into the first layer of the neural network; for Bob, the key and the ciphertext; Eve got just the ciphertext. The first layer was fully connected, so the text and key could mix. After the first layer came a number of convolutional layers, each of which learnt to apply a function to the bits handed to it by the previous layer. The networks were not told what that function should be; they just learnt as they went along. Alice’s final layer spat out some ciphertext; Bob and Eve output what they hoped was the plaintext.
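The “mix and transform” shape can be sketched as a forward pass in NumPy: one fully connected layer that mixes the inputs, followed by a few 1D convolutions. The layer sizes, kernel width, sigmoid activations, and helper names below are assumptions chosen for illustration; the weights are random and untrained, so the output is not meaningful ciphertext.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_network(n_in, n_out, n_conv=3, kernel_size=3, seed=0):
    """Random, untrained weights for one 'mix and transform' network."""
    rng = np.random.default_rng(seed)
    return {
        "fc_weights": rng.normal(0.0, 0.5, size=(n_in, n_out)),
        "fc_bias": np.zeros(n_out),
        "conv_kernels": [rng.normal(0.0, 0.5, size=kernel_size)
                         for _ in range(n_conv)],
    }

def forward(net, *inputs):
    # Concatenate whatever the network is given (text, and key if it has one).
    x = np.concatenate(inputs, axis=1)
    # "Mix": the fully connected layer lets every input bit affect every output.
    h = sigmoid(x @ net["fc_weights"] + net["fc_bias"])
    # "Transform": each 1D convolution applies a local function to nearby values.
    for kernel in net["conv_kernels"]:
        h = sigmoid(np.stack([np.convolve(row, kernel, mode="same") for row in h]))
    return h

N = 16  # message and key length in bits (assumed)
alice = init_network(2 * N, N, seed=1)  # sees plaintext + key
bob = init_network(2 * N, N, seed=2)    # sees ciphertext + key
eve = init_network(N, N, seed=3)        # sees ciphertext only

rng = np.random.default_rng(42)
plaintext = rng.integers(0, 2, size=(4, N)).astype(float)
key = rng.integers(0, 2, size=(4, N)).astype(float)

ciphertext = forward(alice, plaintext, key)
bob_guess = forward(bob, ciphertext, key)
eve_guess = forward(eve, ciphertext)
```

The only structural difference between the three networks here is the input width: Eve's first layer is half as wide because she never sees the key.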

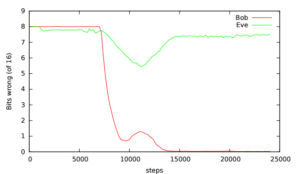

The results were… a mixed bag. Some runs were a complete flop, with Bob never able to reconstruct Alice’s messages. Most of the time, Alice and Bob did manage to evolve a system where they could communicate with very few errors. In some tests, Eve showed an improvement over random guessing, but Alice and Bob then usually responded by improving their cryptography technique until Eve had no chance (see graph).