According to a Statista report, there are 1.76 billion websites, and the number keeps growing. With so much information available, finding data relevant to your specific needs can be daunting.

This is where web scraping comes in handy. But scraping the web from your own IP address can get you blocked from several crucial sites. To mitigate this, you need a proxy. However, as with many skills, this technology can be hard to use as a beginner.

IMAGE CREDIT: OXYLABS

In this article, we will discuss the basics of web scraping using proxies. But before we get into the nitty-gritty, let’s understand IP addresses and how they work.

What is an IP address?

An IP address is a numerical label assigned to a device connected to the internet. An IPv4 address consists of four numbers separated by dots, for example, 203.0.113.25. It helps identify your device. When you make a request to Forbes, its servers can see your IP address.
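To make the format concrete, here is a minimal sketch that checks whether a string is a well-formed IP address, using Python's standard `ipaddress` module. The helper name `describe` and the sample addresses are illustrative assumptions, not part of any scraping tool.

```python
import ipaddress


def describe(address: str) -> str:
    """Report whether a string is a well-formed IPv4 or IPv6 address."""
    try:
        ip = ipaddress.ip_address(address)
    except ValueError:
        # Raised when the string is not a complete, valid address.
        return f"{address} is not a valid IP address"
    return f"{address} is a valid IPv{ip.version} address"


if __name__ == "__main__":
    # 203.0.113.25 comes from a range reserved for documentation examples.
    print(describe("203.0.113.25"))
    # Only three numbers, so this is not a complete IPv4 address.
    print(describe("203.0.113"))
```

An address with only three dotted numbers fails the check because IPv4 needs four.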

What is a proxy?

A proxy is a third-party server that forwards your requests on your behalf, so each request reaches the target from the proxy’s IP address. As such, Forbes will not see your IP address but the proxy’s. This makes web scraping safer. In most cases, the target site treats the proxied request as a normal request.
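As a rough illustration of routing a request through a proxy, the sketch below uses only Python's standard `urllib.request` module. The proxy address `PROXY_URL` and the helper names `build_proxy_opener` and `fetch` are hypothetical; substitute the endpoint your proxy provider gives you.

```python
import urllib.request

# Hypothetical proxy endpoint (documentation-range address); replace with your own.
PROXY_URL = "http://203.0.113.10:8080"


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url: str, proxy_url: str = PROXY_URL, timeout: float = 10.0) -> bytes:
    """Fetch url through the proxy and return the raw response body."""
    opener = build_proxy_opener(proxy_url)
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Calling `fetch("https://example.com")` would then reach the target from the proxy's address rather than yours, provided the placeholder endpoint is replaced with a working one.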

Why use proxies when web scraping?

There are two main benefits of using proxies:

- Masking your IP address – you essentially use the IP address of the proxy server.

- Getting around the target website’s rate limit – websites can detect and block IP addresses that make an unusually high number of requests. To stay under the rate limit, use a proxy pool (many proxies) and split your traffic to the target website across it.

Other benefits include:

- Making geo-specific requests – if you want to view TV programs of a specific area or products that are not available in your location, you can make a web request using proxy servers in that location.

- Making numerous concurrent requests to a single website.

- Making requests with less risk of your spider getting blocked.
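The geo-specific benefit above can be sketched as a lookup from country to proxies. This is a minimal example under stated assumptions: the pool `PROXIES_BY_COUNTRY`, its placeholder addresses, and the helper `pick_proxy` are all hypothetical, and a real provider may expose location selection differently.

```python
import random
from typing import Dict, List

# Hypothetical pool keyed by country code; the addresses are placeholders.
PROXIES_BY_COUNTRY: Dict[str, List[str]] = {
    "us": ["http://203.0.113.10:8080", "http://203.0.113.11:8080"],
    "de": ["http://198.51.100.20:8080"],
}


def pick_proxy(country: str, pool: Dict[str, List[str]] = PROXIES_BY_COUNTRY) -> str:
    """Return a randomly chosen proxy located in the requested country."""
    candidates = pool.get(country.lower())
    if not candidates:
        raise LookupError(f"no proxy available for country {country!r}")
    return random.choice(candidates)
```

A request meant to see German results would then be sent through `pick_proxy("de")`.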

Which proxy options do you have?

Choosing the ideal proxy solution can be daunting for a beginner. In this section, we will highlight the three main options so that you can make an informed decision. So, let’s cut to the chase.

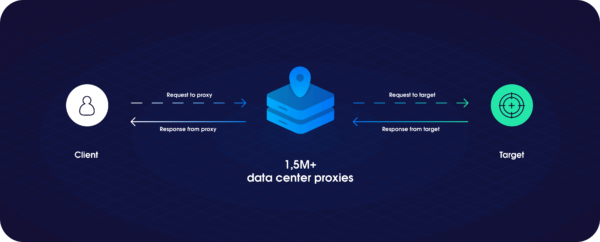

- Datacenter IPs

Datacenter IPs are the most common proxy option for web scrapers. They are cheap and readily available. The IPs of these servers are located in data centers all over the globe, from Asia to North America. We recommend this solution for beginners owing to its ease of use and low cost.

- Residential IPs

Residential IPs allow you to make requests through a residential network. Although reliable, they are hard to get and expensive. Besides, why use them when there is a cheaper option in datacenter IPs? That said, they can give you access to data that is only accessible via proxies.

- Mobile IPs

Finally, mobile IPs are used when scraping the web for mobile-oriented results. However, they are hard to find and also expensive. Besides, you might get yourself in trouble, since the owner of the mobile device may not be aware that you are using their IP address.

So which should you use? For most beginners, datacenter IPs are the best choice. While mobile IPs can provide you with valuable mobile-user data, they can get both you and the mobile user in trouble.

How do you manage your pool of proxies?

Depending on the scale of your web scraping, you may use anywhere from 10 to thousands of proxies. While managing 10 can be a piece of cake, managing 1,000 can be a nightmare. Fortunately, there are various ways to get around this.

- Proxy rotators

A proxy rotator assigns an IP address from the proxy pool to each request, so consecutive requests go out from different addresses. As such, you can launch numerous requests concurrently. Simply put, the rotator handles proxy assignment while you focus on other tasks, such as throttling.
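One simple way to picture a rotator is round-robin assignment over a fixed pool. The sketch below assumes that strategy; commercial rotators may choose proxies differently. The class name `ProxyRotator`, the pool addresses, and the URLs are hypothetical.

```python
import itertools
import threading


class ProxyRotator:
    """Hand out proxies from a fixed pool in round-robin order."""

    def __init__(self, proxies):
        proxies = list(proxies)
        if not proxies:
            raise ValueError("proxy pool must not be empty")
        self._cycle = itertools.cycle(proxies)
        # The lock keeps next_proxy safe to call from several threads.
        self._lock = threading.Lock()

    def next_proxy(self) -> str:
        """Return the next proxy in the rotation."""
        with self._lock:
            return next(self._cycle)


if __name__ == "__main__":
    # Placeholder addresses; replace with proxies from your provider.
    rotator = ProxyRotator([
        "http://203.0.113.10:8080",
        "http://203.0.113.11:8080",
        "http://203.0.113.12:8080",
    ])
    for page in range(5):
        url = f"https://example.com/page/{page}"
        print(url, "via", rotator.next_proxy())
```

With three proxies and five requests, the fourth request wraps around to the first proxy again, so each proxy carries roughly a third of the traffic.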

- Outsource

You can pay a company or a hosted solution to handle proxy management for you. Some of the most common are Crawlera, Apify, Scrapy Cloud, and Octoparse, among others. They handle proxy rotation, session management, and similar tasks.

- DIY (Do It Yourself)

Once you purchase a pool of proxies, you can build your own proxy management solution to handle challenges such as throttling. Although it avoids service fees, it is time and resource consuming. We don’t recommend this route.
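To give a sense of what a DIY solution involves, here is a minimal throttling sketch that enforces a minimum gap between uses of the same proxy. It is one possible design, not a complete manager: the class name `ThrottledPool`, the `min_interval` default, and the injectable `clock` and `sleep` parameters are assumptions made for illustration and testing.

```python
import time


class ThrottledPool:
    """Pick the proxy that becomes available soonest, with a minimum gap per proxy."""

    def __init__(self, proxies, min_interval=2.0, clock=time.monotonic, sleep=time.sleep):
        proxies = list(proxies)
        if not proxies:
            raise ValueError("proxy pool must not be empty")
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        # Earliest time each proxy may be used again; -inf means "available now".
        self._ready_at = {proxy: float("-inf") for proxy in proxies}

    def acquire(self) -> str:
        """Return a proxy, sleeping first if every proxy was used too recently."""
        proxy = min(self._ready_at, key=self._ready_at.get)
        wait = self._ready_at[proxy] - self._clock()
        if wait > 0:
            self._sleep(wait)
        # Reserve the proxy until min_interval seconds from now.
        self._ready_at[proxy] = self._clock() + self._min_interval
        return proxy
```

Even this small sketch leaves out retries, ban detection, and session handling, which hints at why a home-grown manager takes real effort to build.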

Final say

Web scraping is an innovative and disruptive way to gain information that is vital for business growth, especially in the data-driven economy. Proxies allow you to scrape the web with far less risk of getting banned.