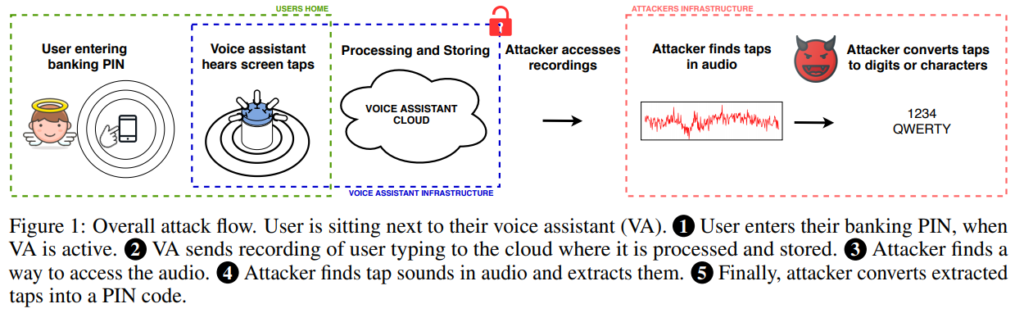

A team of researchers from the University of Cambridge, UK, has shown how smart voice assistants can leak data. They found that the microphones in smart speakers can pick up the sound of text being typed on smartphones in close proximity. An adversary can process these audio recordings to reconstruct the typed text and steal data.

In brief, the attack is possible because of the way voice assistants are designed.

As the researchers explained, almost every smart speaker, such as the Amazon Echo (running Alexa) or devices running Google Assistant, includes multiple microphones. These microphones are very sensitive and often directional. As a result, they can pick up sounds that we cannot easily hear, such as taps on a smartphone's virtual keyboard.
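Directional sensing with several microphones usually relies on the tiny difference in the time a sound takes to reach each one. The sketch below assumes a simplified two-microphone setup (a real array would combine many microphone pairs): it estimates that time difference from the peak of the cross-correlation between the two channels, then converts it into an arrival angle under a far-field assumption. The function names, the 343 m/s speed of sound, and the 5 cm spacing are illustrative assumptions, not details taken from the paper.

```python
import numpy as np


def estimate_delay(sig_a: np.ndarray, sig_b: np.ndarray, sample_rate: int) -> float:
    """Seconds by which sig_b lags sig_a, taken from the cross-correlation peak."""
    corr = np.correlate(sig_b, sig_a, mode="full")
    # In "full" mode, index len(sig_a) - 1 corresponds to zero lag.
    lag_samples = int(np.argmax(corr)) - (len(sig_a) - 1)
    return lag_samples / sample_rate


def arrival_angle(delay_s: float, mic_spacing_m: float, speed_of_sound: float = 343.0) -> float:
    """Far-field arrival angle in degrees, measured from the perpendicular of the mic pair."""
    # The extra path to the farther microphone is spacing * sin(angle).
    ratio = np.clip(speed_of_sound * delay_s / mic_spacing_m, -1.0, 1.0)
    return float(np.degrees(np.arcsin(ratio)))


# Usage: simulate a short click that reaches microphone B five samples after microphone A.
rng = np.random.default_rng(0)
click = rng.standard_normal(64)
mic_a = np.concatenate([np.zeros(100), click, np.zeros(100)])
mic_b = np.roll(mic_a, 5)
delay = estimate_delay(mic_a, mic_b, sample_rate=48_000)
print(delay, arrival_angle(delay, mic_spacing_m=0.05))
```

A 5-sample lag at 48 kHz is about 0.1 ms, which for microphones 5 cm apart corresponds to roughly 46° from the perpendicular of the pair.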

These microphones are also a risk because they stay in an ‘always-on’ state. Although the assistants activate only on their respective wake words, such as “Alexa” or “Hey Google”, they keep listening to audio at all times. This is what lets them respond immediately when called.

This continuous listening is also a privacy risk for users, especially since many studies have shown how attackers can process sounds to steal sensitive data.

Stealing Texts Typed On Smartphones Via Voice Assistants

The microphones built into smartphones are also vulnerable, but exploiting them requires access to the specific target phone. With the microphones in smart speakers, by contrast, an adversary can spy on multiple nearby devices at once.

As the researchers stated in their paper,

In this work, we show that attacks on virtual keyboards do not necessarily need to assume access to the device, and can actually be performed with external microphones. For example, we show how keytaps performed on a smartphone can be reconstructed by nearby smart speakers.

In their study, the researchers demonstrated that an attacker who compromises a smart speaker can obtain the recordings captured by its microphones. Processing these recordings to detect each tap and estimate where on the screen it landed then reveals what the user typed on the smartphone.
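To make the tap-detection step more concrete, here is a minimal sketch of a common baseline, short-time energy thresholding, which flags moments where the sound energy jumps well above the background level. This is not the researchers' detector; the function name, frame length, threshold ratio, and minimum gap between taps are hypothetical values chosen for illustration.

```python
import numpy as np


def detect_taps(signal: np.ndarray, sample_rate: int, frame_ms: float = 5.0,
                threshold_ratio: float = 8.0, min_gap_ms: float = 100.0) -> list[int]:
    """Return start samples of frames whose energy jumps well above the noise floor."""
    frame = max(1, int(sample_rate * frame_ms / 1000))
    n_frames = len(signal) // frame
    frames = signal[: n_frames * frame].reshape(n_frames, frame)
    energy = (frames.astype(float) ** 2).mean(axis=1)
    # Taps occupy few frames, so the median energy approximates the background noise.
    noise_floor = np.median(energy) + 1e-12
    min_gap = int(min_gap_ms / frame_ms)  # frames to skip so one tap is not counted twice
    onsets: list[int] = []
    last = -min_gap
    for i, e in enumerate(energy):
        if e > threshold_ratio * noise_floor and i - last >= min_gap:
            onsets.append(i * frame)
            last = i
    return onsets


# Usage: one second of quiet noise with two louder bursts standing in for taps.
rng = np.random.default_rng(1)
sr = 48_000
audio = 0.01 * rng.standard_normal(sr)
for start in (9_600, 28_800):  # simulated taps at 0.2 s and 0.6 s
    audio[start:start + 480] += rng.standard_normal(480)
print(detect_taps(audio, sr))
```

In a real room, other short, loud sounds would cross the same threshold, which is one reason tap detection is harder with external microphones.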

Although detection performed somewhat differently for digits and letters, it gave fairly accurate results in both cases. The attack worked at distances of up to half a meter from the smart speaker.

As stated in the paper,

Given just 10 guesses, 5-digit PINs can be found up to 15 % of the time, and text can be reconstructed with 50 % accuracy.
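The “10 guesses” figure is a top-k success rate: a trial counts as a hit if the true value appears anywhere in the attacker's 10 highest-ranked guesses. As a point of reference, a 5-digit PIN has 100,000 possible values, so 10 blind guesses would succeed only 10 / 100,000 = 0.01% of the time; 15% is roughly 1,500 times better than chance. The helper below is a hypothetical sketch of how such a rate is computed, not code from the paper.

```python
from typing import Sequence


def top_k_accuracy(ranked_guesses: Sequence[Sequence[str]],
                   truths: Sequence[str], k: int = 10) -> float:
    """Fraction of trials where the true value is among the first k ranked guesses."""
    if len(ranked_guesses) != len(truths):
        raise ValueError("need one ranked guess list per ground-truth value")
    hits = sum(truth in guesses[:k] for guesses, truth in zip(ranked_guesses, truths))
    return hits / len(truths)


# Usage: three PIN-entry trials, each with the attacker's guesses ranked best-first.
guesses = [["12345", "12346"], ["00000", "11111"], ["99999"]]
truths = ["12346", "22222", "99999"]
print(top_k_accuracy(guesses, truths, k=10), top_k_accuracy(guesses, truths, k=1))
```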

Source: Zarandy et al.

For their experiment, the researchers used a Raspberry Pi fitted with a ReSpeaker 6-mic circular array. The victim devices included an HTC Nexus 9 tablet, a Nokia 5.1 smartphone, and a Huawei Mate20 Pro smartphone. The Pi also had a Wi-Fi setup so that other devices could connect to it.

The researchers have published the details of their findings in a research paper.

Limitations and Countermeasures

Although the attack sounds easy to execute, it has limitations, the main one being tap detection.

Tap detection is less of a problem with a phone's internal microphones. With external microphones, however, it is a major hurdle, because background noise in the environment can trigger false positives.

Likewise, when reconstructing text, the difficulty of detecting taps correctly, combined with the need to search large dictionaries, makes the attack fairly impractical as a real-world exploit.
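A toy model helps show why tap detection and dictionary size interact. Suppose each detected tap can only be narrowed down to a few neighboring keys: the number of raw letter strings grows multiplicatively with word length, a dictionary filter cuts that down but still leaves several plausible words, and a single missed or spurious tap changes the word length so the right word is filtered out entirely. The candidate sets, word list, and function names below are hypothetical.

```python
import math


def raw_combinations(tap_candidates: list[set[str]]) -> int:
    """Number of letter strings an attacker would face without a dictionary."""
    return math.prod(len(keys) for keys in tap_candidates)


def candidate_words(tap_candidates: list[set[str]], dictionary: list[str]) -> list[str]:
    """Dictionary words with one letter per detected tap, each drawn from that tap's candidates."""
    return sorted({
        word for word in dictionary
        if len(word) == len(tap_candidates)
        and all(ch in keys for ch, keys in zip(word, tap_candidates))
    })


# Hypothetical per-tap candidates: the estimated key plus two QWERTY neighbors.
taps = [set("cvx"), set("aqs"), set("try")]
words = ["cat", "car", "vat", "sat", "cart"]
print(raw_combinations(taps), candidate_words(taps, words))
print(candidate_words(taps[:2], words))  # a missed tap leaves no matching words
```

With a realistic dictionary of tens of thousands of words, many more candidates would survive the filter, leaving the attacker to rank them.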

As for countermeasures, screen protectors and phone cases alter tap acoustics and therefore provide partial protection against this attack.

The researchers also advise smartphone makers to introduce “silent tap-like sounds” that play when the keyboard is opened. Such sounds would raise the attacker's false-positive rate, thereby mitigating the attack.
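A back-of-the-envelope model shows why extra tap-like sounds help: if decoys are indistinguishable from real taps, they dilute the attacker's detections and lower precision (the share of detected events that are genuine keystrokes). This is a deliberately simplified sketch with a hypothetical function name and numbers, not the researchers' analysis.

```python
def attacker_precision(real_taps: int, decoy_sounds: int, spurious: int = 0) -> float:
    """Fraction of detected tap events that are genuine keystrokes.

    Assumes every real tap and every decoy is detected, and that the attacker
    cannot acoustically tell decoys apart from real taps.
    """
    detected = real_taps + decoy_sounds + spurious
    return real_taps / detected if detected else 0.0


# Typing 20 characters, with 2 spurious detections from room noise.
print(attacker_precision(20, 0, spurious=2))   # no decoys
print(attacker_precision(20, 20, spurious=2))  # one decoy per keystroke
```

In this model, about 91% of detections are genuine without decoys, and adding one decoy per keystroke drops that to about 48%.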